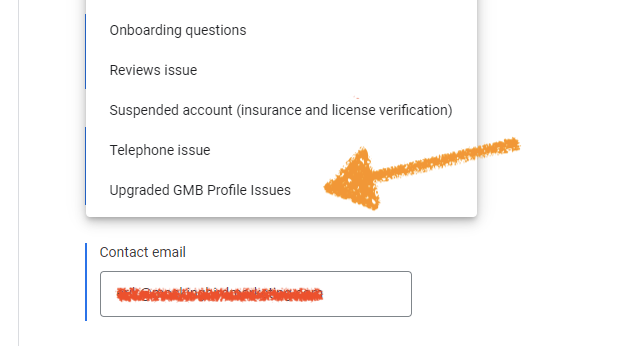

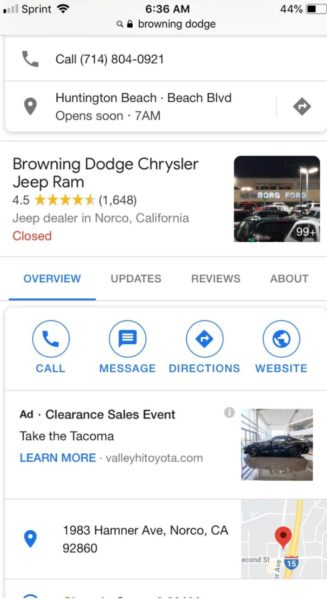

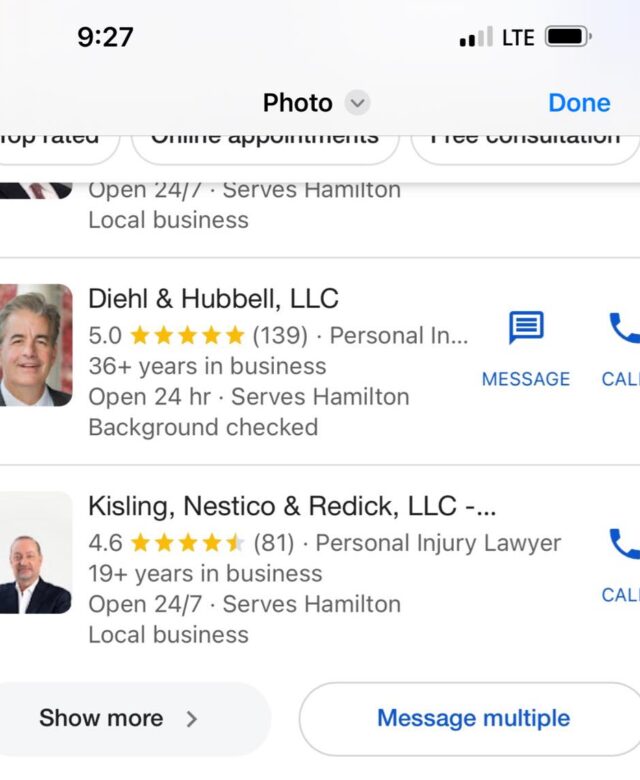

Google’s Local Service Ads are now enabling a feature that lets the end user message multiple law firms at once. See the image to the right, “Message multiple”, for an example. Google now looks exactly like the aggressive and often anonymized lead generation companies that sell a single lead to multiple firms. The catch here is that, due to the opacity of LSA reporting, the law firm has NO idea how many other firms received the lead, or how much it is paying per lead. And if history repeats itself (see below), it’s highly likely that law firms are paying very similar amounts for a phone call that goes exclusively to their firm as they are for a message lead that gets simultaneously submitted to three competitors in the same market.

Hat tip to Josh Hodges for the heads up on this.

Some Recent History of Google’s LSA Money Grab

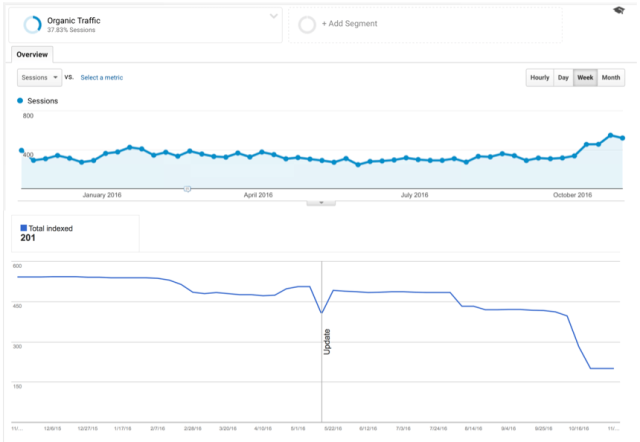

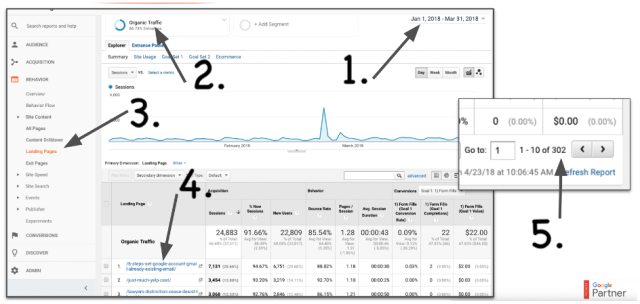

On February 12th of this year, Google rolled out (and automatically opted all advertisers into) a product called Direct Business Search. Listeners of the Lunch Hour Legal Marketing pod will know that I’ve been highly skeptical of it because of Google’s opacity in data on LSA performance. For context, PPC rates for branded campaigns (“Smith and Jones Law Firm”) run (across our client base) $3.41 per click, compared to non-branded campaigns (“car accident lawyer Cleveland”), which are much more expensive. Because Google offers no granularity in its reporting, it’s very difficult to home in on what Google is charging for those branded queries. We’ve done some very blunt analysis on this and, in conjunction with some internal law firm studies, have come to the conclusion that it’s about $150-$200 for branded terms: roughly a 50x increase on what you’d pay in PPC. As such, we’ve opted clients out of these. (Now the counterpoint, which Gyi raises in the latest LHLM podcast, is: does opting out of branded get you kicked out of the non-branded? That’s another “what if” that’s impossible to answer without more granular data.) If you want to hear the full discussion on the pod, have a listen: Google Local Service Ads: To Brand or Not To Brand.
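For anyone who wants to check the "50x" math, here is a quick sketch using only the figures above: $3.41 per branded PPC click and our blunt $150-$200 estimate for branded LSA terms. The function and variable names are mine, purely for illustration, and the LSA range is an estimate, not a number Google reports.

```python
def cost_multiple(lsa_cost: float, ppc_cost_per_click: float) -> float:
    """How many times more the LSA charge is than a branded PPC click."""
    return lsa_cost / ppc_cost_per_click


# Figures quoted in the post; the LSA range is a blunt estimate.
PPC_BRANDED_CPC = 3.41
LSA_BRANDED_LOW = 150.0
LSA_BRANDED_HIGH = 200.0

low = cost_multiple(LSA_BRANDED_LOW, PPC_BRANDED_CPC)
high = cost_multiple(LSA_BRANDED_HIGH, PPC_BRANDED_CPC)

print(f"Branded LSA costs roughly {low:.0f}x to {high:.0f}x a branded PPC click")
```

The range straddles 50x, which is where the round number comes from.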

Squeezing the Legal Industry

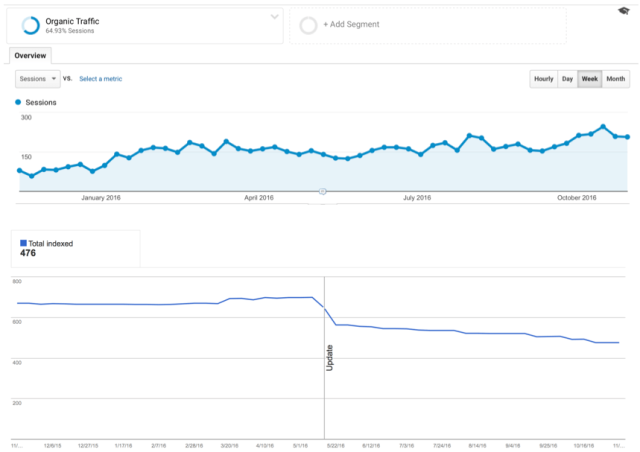

Google’s pattern of conflating three very different advertising models (brand search, lead gen and direct response) ultimately leads to more law firms spending more money on a marketing channel that is deliberately designed to be economically inefficient. Note that they did this in PPC as well, with close variants blurring branded and non-branded terms, for example conflating “Morgan and Morgan” with “car accident lawyer”. This has increased overall PPC spend without generating more clients for lawyers. Put differently: this change in LSAs reflects Google finding more ways to squeeze more money out of law firms without providing incremental value.

What Should I Do About It?

Watch your economics carefully. Any testing you’d like to do is going to be very blunt: for example, turn off messaging for two weeks, then opt out of branded keywords for two weeks, and then try to compare the economics (cost per consult, not cost per lead) across these very broad tests taken during different time periods. With Google refusing to provide any insight into what makes up your overall LSA spend, this is the only way to try to get a handle on how LSAs are performing for your firm.
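If you track this in a spreadsheet or a script, the comparison might look something like the sketch below. Every number in it is made up for illustration, and the `Period` class and its field names are my own invention, not anything exported from LSA reporting. The point is simply to rank each test window by cost per consult rather than cost per lead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Period:
    """One blunt test window (hypothetical structure, not an LSA export)."""
    label: str
    lsa_spend: float  # total LSA spend during the window
    leads: int        # calls plus messages charged as leads
    consults: int     # leads that turned into an actual consultation

    def cost_per_lead(self) -> Optional[float]:
        return self.lsa_spend / self.leads if self.leads else None

    def cost_per_consult(self) -> Optional[float]:
        return self.lsa_spend / self.consults if self.consults else None


# Made-up numbers for three two-week windows.
periods = [
    Period("baseline (everything on)", 6000.0, 40, 12),
    Period("messaging off", 5000.0, 30, 12),
    Period("branded opted out", 4200.0, 25, 10),
]

# Rank by cost per consult; windows with zero consults are skipped.
ranked = sorted(
    (p for p in periods if p.cost_per_consult() is not None),
    key=lambda p: p.cost_per_consult(),
)

for p in ranked:
    print(
        f"{p.label}: ${p.cost_per_lead():.2f} per lead, "
        f"${p.cost_per_consult():.2f} per consult"
    )
```

In this invented data the baseline has the cheapest leads but the most expensive consults, which is exactly why cost per lead is the wrong number to watch. Keep in mind that the windows cover different dates, so seasonality and lead volume can swamp small differences.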