FindLaw has a history of tying up their clients in contractual and technical knots, so I shouldn’t be surprised at their latest technical entanglement.

I’ve been quiet about FindLaw for a few years now. The tales of their dirty tricks have faded: registering domains to themselves, overpriced social media tools, data hijacking, the delightful link-selling scandal, and the abhorrently gross practice of having clients pay to SEO a site only to later resell that site to a competitor (see FindLaw Selling Pre-SEO’d Websites). Years ago, in a fit of annoyance, I penned The FindLaw Jailbreak Guide and watched my website explode with traffic from Eagen, Minnesota.

The latest from FindLaw is likewise brazen, obnoxious and self-serving. Business models predicated on entrapping clients make me retch. One of the previous tricks in the FindLaw arsenal that artificially tied customers to them was the proprietary website platform: a website built on a backend that no one other than FindLaw could work on. This made sites time-consuming and expensive to maintain, as updates had to flow through FindLaw. It was also difficult to leave, despite the overpriced monthly charges, because sites needed to be rebuilt from scratch, which is an expensive endeavor. Over the years, the legal industry finally caught on to these technical handcuffs, opting instead for the freedom of the widely used WordPress platform. Then, back in February 2018, a little birdie whispered in my ear that FindLaw was moving to WordPress, leaving Scorpion as the only big-box legal provider with a proprietary platform. I surmised in a blog post that FindLaw had to move because they were losing clients who preferred the freedom, flexibility and performance of the widely adopted WordPress platform:

Having spoken to perhaps a hundred FindLaw clients in detail over the past decade, the long term, captive nature of the proprietary platform and contracts is something clients resent. No one wants to be beholden to a vendor, especially when cheaper, better alternatives exist. This has been, perhaps, the primary reason we’ve easily been able to score deals with FindLaw clients.

So three years ago, FindLaw finally evolved to a customer-centric, WordPress-based approach! Hold the phone…

Fast forward to 2021, and law firms are starting to age out of long-term FindLaw contracts for their new WordPress websites. Some have started looking for new vendors. Generally, transitioning a website from one agency to another looks like this: the client uses their WordPress admin account to create a login for the new agency and hits send. It takes a solid 90 seconds, access to the Internet and the technical acumen of one of my pet chickens. In moments, the new agency, contractor, in-house marketer or precociously nerdy teenager of one of the partners can work on the legacy site. This easy transferability firmly puts the law firm (instead of its vendor) in control of its site and is one of the prime reasons WordPress is superior to proprietary platforms.

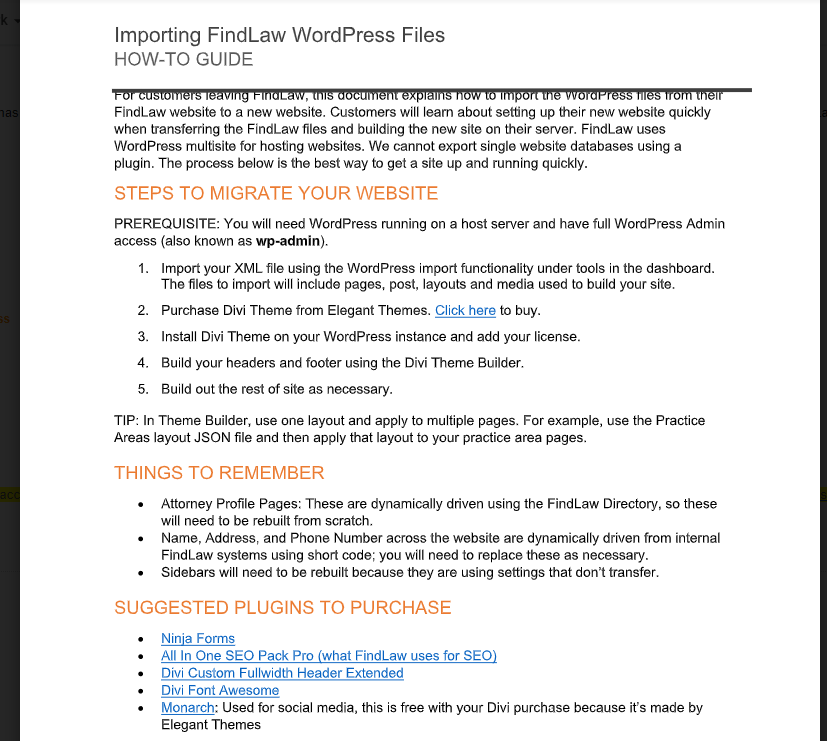

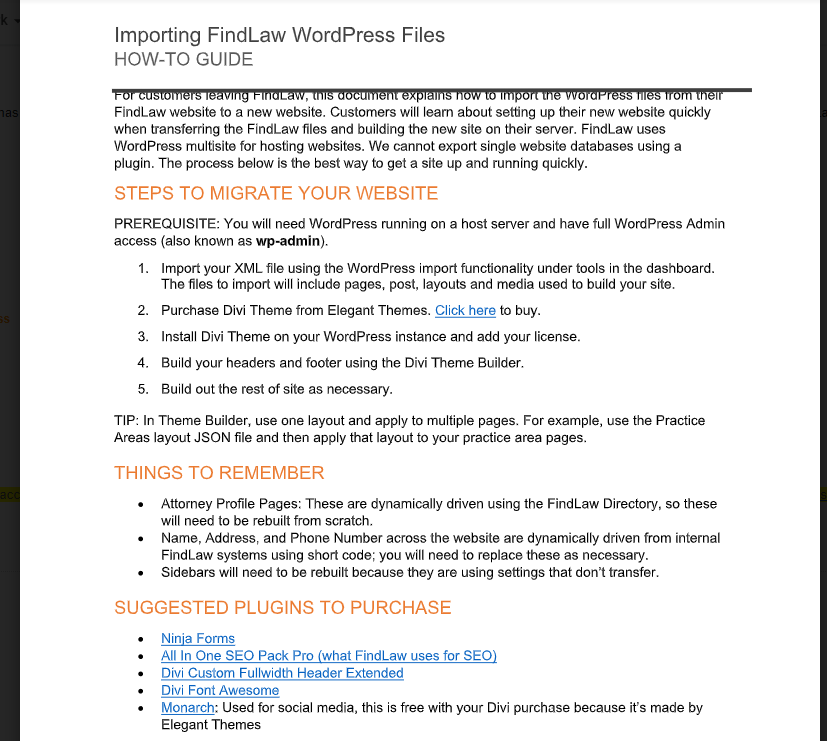

But not with FindLaw’s WordPress sites, which have been pompously, cynically and deliberately designed to make the transition process painful, expensive and time-consuming. Once law firm clients decide they’ve had enough and want to move on, FindLaw’s “don’t let the door hit you on the way out” attitude is best embodied in a FindLaw document entitled “Importing FindLaw WordPress Files How-To Guide,” which goes to great pains to demonstrate just how annoying, painful, time-consuming, technical and expensive the transition process is going to be. (And I’ll bet you a bottle of scotch that FindLaw’s sales staff don’t share this file when they are peddling websites through cold-calling outreach.)

Let’s dig into some of the specifics:

- Expenditures – Customers are directed to purchase six different themes and plug-ins to make their ex-FindLaw WordPress site functional.

- Content – Much of the content on FindLaw WordPress sites is syndicated directly from the FindLaw directory, including the attorney profile: “Attorney Profile Pages are dynamically driven using the FindLaw Directory, so these will need to be created from scratch.” Beyond the annoyance of rebuilding a standard page type, take a moment to consider the SEO implications of this tactic. FindLaw syndicates content from its own directory to its clients’ websites, creating a massive duplicate content problem and pitting FindLaw against its own clients in search for arguably the firm’s most valuable page, the Attorney Profile Page.

- Technical Talk – not scared off yet? Try this on for size for the average J.D. “In Theme Builder, use one layout and apply to multiple pages. For example, use the Practice Areas layout JSON file and then apply that layout to your practice area page.” How about, “Sidebars will need to be rebuilt because they are using settings that don’t transfer.” And my favorite is #5 in the Steps to Migrate Your Website process: “Build out the rest of site as necessary.” Still feel like you can “get a site up and running quickly”?

- Divi Builder – This will be lost on almost all lawyers, but Divi Builder is widely panned among high-end developers, especially when compared to the robustness and cleanliness of other available themes. Divi is built for non-technical beginners who want to customize design without needing to code, not for experts who want full, clean control. A comparison of Divi and the Elementor page builder sums it up: “Get Divi if you are a solopreneur with 1 Website.” And while this may describe many lawyers, it is certainly not what to expect when buying from an established vendor. The fact that FindLaw uses the coding equivalent of paint-by-numbers for its WordPress platform is a huge red flag.

- Sardonic Irony – “Customers will learn about setting up their new website quickly when transferring the FindLaw files and building the new site on their server… the process below is the best way to get a site up and running quickly.” The emphases are mine, but I can’t help being gripped by the intentional sardonic irony of an author who knows damn well that “building the new site” isn’t “quick,” especially compared with the alternative of simply handing over logins to a WordPress site, like every other reasonable agency in the world does.

Under the guise of being a helpful how-to, this document is actually a warning shot about the cost, the pain and the forthcoming nightmare for an attorney leaving their FindLaw website: an MBA’s most pathetic, craven approach to customer retention.

Costs of “Migrating” a FindLaw WordPress Website

So what does this mean for a law firm leaving FindLaw for a new agency? Noting that Mockingbird is not necessarily the cheapest alternative, there are basically three options:

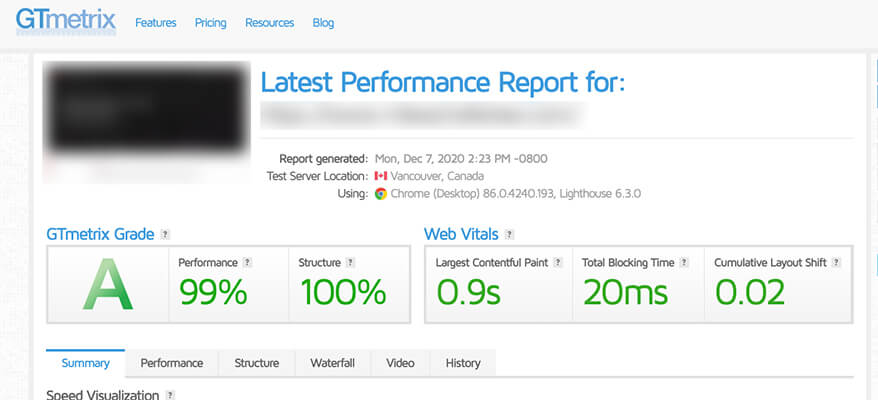

- Rebuild the site per FindLaw How-To Directives. I asked my web dev team to estimate the cost of rebuilding the site to match the existing look, feel and functionality of the FindLaw site while following the How-To Guide. These costs include third-party expenditures, coding time to “build” the site and a pre-launch testing protocol: a rough estimate of $6-$11K. And that is for a very rudimentary, cookie-cutter site with generic design.

- Replace the FindLaw Template with our Echo Template. Many of our smaller clients use our simple WordPress template called Echo, which enables us to get sites up very quickly and at low cost. Say goodbye to the Divi theme. Echo is a modular, fast, cost-effective template that costs $200/month. (For only 24 months. I don’t believe in the gross practice of a perpetual monthly drip out of your bank account, yet another example of scheming MBAs putting business models ahead of their customers.)

- Genuinely Customized Site – If we are rebuilding from scratch, what would a fully bespoke site look like? Cost estimates range from $12-$30K depending on our starting point, the complexity and age of the legacy code, the content map, design customizations, etc. In this case, I wouldn’t recommend this third option: the firm in question is small and should spend more of its budget driving traffic to a good-enough website. Optimizing conversion with custom design makes more sense for a site that is already getting a large volume of traffic.

Stay with FindLaw

Don’t forget: since we started in 2013, the total amount Mockingbird has charged clients to switch to us from another agency running a WordPress site is $0.

When FindLaw moved to the widely adopted WordPress platform, they went out of their way to use technology to systematically ensnare their customers. Their explanation exposes their true intention: “FindLaw uses WordPress multisite for hosting websites. We cannot export single website databases…” They deliberately selected a technology platform that, just like the old, inefficient proprietary code, makes switching costs high enough that at least a few miserable attorneys won’t leave. I’m left with this thought: if FindLaw had spent the same amount of effort building an amazing product as they did entrapping their clients with technology, perhaps their clients wouldn’t be so eager to leave.

Smart lawyers don’t hire agencies that trap them.
