Title tags and meta descriptions are among the first additions to any new SEO’s on-page optimization arsenal. Although they are simple, it’s important to understand what titles and descriptions are, why you should use them, and how to optimize them, because they are one of the most cost-effective ways to improve your SEO.

What Are Title Tags and Meta Descriptions?

Title Tags

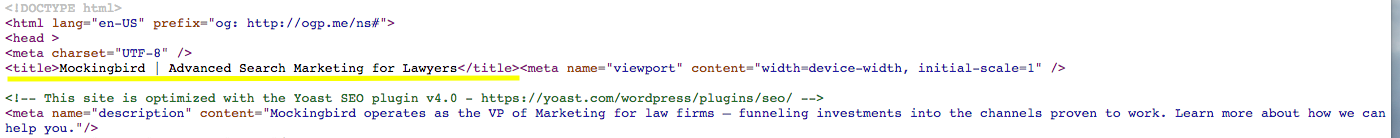

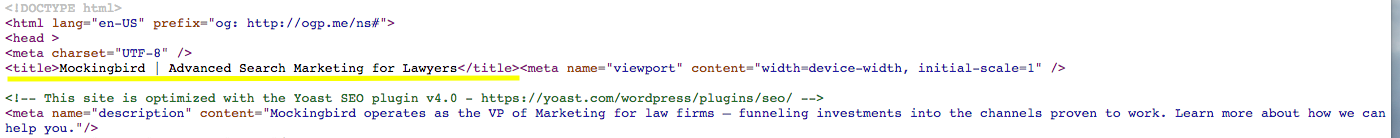

A title tag is an HTML element included in the <head> section of a page on a website. To read a page’s title tag, right-click anywhere on the page and click “View page source”. The title tag is the text between “<title>” and “</title>” (believe it or not).

Go ahead and give it a try on this page!

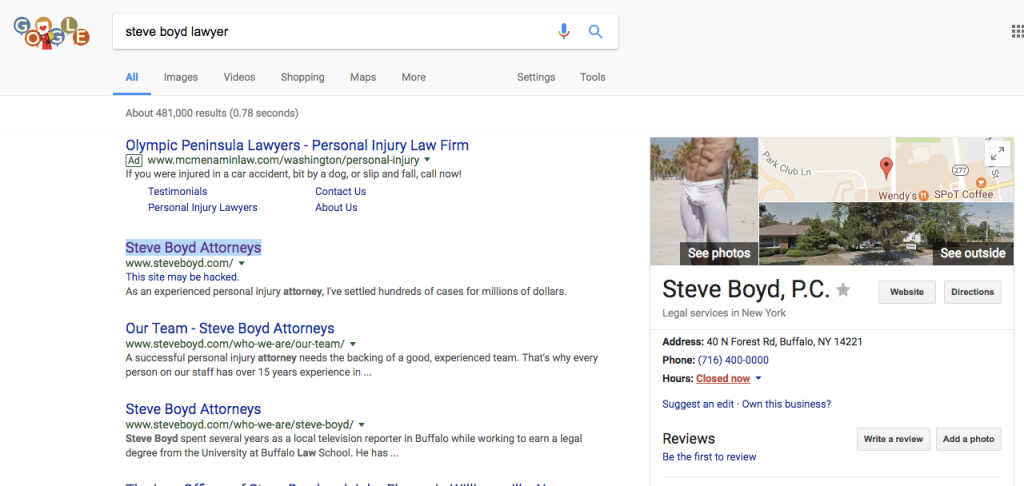

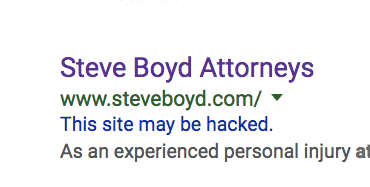
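
If you would rather not dig through the page source by hand, the same lookup can be scripted. The snippet below is a minimal sketch using Python's standard-library `html.parser`; the `TitleExtractor` class, the `extract_title` helper, and the sample HTML are all made up for illustration and are not taken from any real site.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collects the text found between <title> and </title>."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Text may arrive in several chunks, so append rather than assign.
        if self._in_title:
            self.title += data


# Hypothetical page source, invented for this example.
SAMPLE_HTML = """<html>
<head>
  <title>Example Law Firm | Seattle Personal Injury Attorneys</title>
</head>
<body><h1>Welcome</h1></body>
</html>"""


def extract_title(html):
    """Return the page's title text, or an empty string if there is none."""
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return parser.title.strip()


print(extract_title(SAMPLE_HTML))
```

To try it on a real page, you would pass in the HTML you copied from "view page source" instead of `SAMPLE_HTML`.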

The title tag never actually appears on the page itself. It gives search engines a boiled-down description of what a page is about. According to Moz’s 2015 search engine ranking factors survey, title tags are still one of the most important on-page ranking factors. Title tags are helpful for search engines, and they’re helpful for users. When a user performs a search for “Mockingbird Marketing”, the title tag is what shows up as the headline of the result.

The title tag added to a page (in this case, the home page) is the first thing a user sees when they come across a website in the search results. For obvious reasons, you want this text to be inviting, informative, and accurate.

But search results aren’t the only place users encounter your title tag. Title tags also show up in the text displayed on your browser tab and in social media.

Meta Descriptions

A meta description, similar to a title tag, is an HTML element that tells users what a page is about. It, too, can be found in the <head> section of a page.

Meta descriptions, although not as big and bold as title tags in search results, provide users with a more detailed description of what a page is about. This text is found directly below the title in search results.
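
For reference, here is roughly what a meta description looks like in a page's <head>, along with a way to pull it out programmatically. This is a sketch under assumptions: the sample HTML is invented, and `MetaDescriptionExtractor` and `extract_meta_description` are hypothetical names built on Python's standard-library `html.parser`.

```python
from html.parser import HTMLParser


class MetaDescriptionExtractor(HTMLParser):
    """Finds the first <meta name="description" content="..."> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        # Only the first description found is kept.
        if tag != "meta" or self.description is not None:
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""


# Hypothetical page source, invented for this example.
SAMPLE_HTML = """<html>
<head>
  <title>Example Law Firm | Seattle Personal Injury Attorneys</title>
  <meta name="description" content="Example Law Firm helps injured people in Seattle. Call for a free consultation.">
</head>
<body></body>
</html>"""


def extract_meta_description(html):
    """Return the meta description text, or None if the page has none."""
    parser = MetaDescriptionExtractor()
    parser.feed(html)
    parser.close()
    return parser.description


print(extract_meta_description(SAMPLE_HTML))
```

Unlike the title, the description lives in the tag's `content` attribute rather than between an opening and closing tag, which is why the sketch reads attributes instead of text.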

Why Should I Use Title Tags and Meta Descriptions?

There are two reasons to make sure that each page on your site has optimized title tags and descriptions:

- For search engines

- For users

For search engines, the benefit is hard to quantify. In the good old days, Google used a page’s title tag as a primary ranking factor. Since then, search engines have added a multitude of ranking factors to consider alongside meta tags, reducing their clout, and the exact influence of a title tag on page ranking today is unclear.

Google has been clearer about meta descriptions. Matt Cutts of Google said in 2009 that meta descriptions are not used as ranking factors.

For users, the value is much more definite, and this is where the real payoff of optimizing title tags and meta descriptions lies. Giving your pages clear titles and descriptions draws in the user. If a user comes across the title of your page in search and it does a good job of describing exactly what the content within the page is about, the user is more likely to click through to your page and stay there.

How Do You Optimize Title Tags and Meta Descriptions?

There are a few things to keep in mind as you optimize your title tags and descriptions.

- Length: Google will generally display only the first 50-60 characters of your title tag. Keep your title within this length to ensure nothing gets cut off. Meta descriptions should fall between 150 and 160 characters.

- Keep Users in Mind: Spamming meta tags with keywords looks suspicious to search engines and users. When writing meta tags for a page, first go through the page and make sure you have a strong understanding of what the page is about. Boil this down to title tag and meta description length.

- Important Keywords First: As users scan a page filled with search results, their eyes start on the left side of the page. Place the most relevant words early in your title tag.

- Never Repeat: Duplicate titles and descriptions confuse everybody, search engines and users alike.
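
The length and duplication guidelines above are easy to check automatically. Here is a rough sketch of such a check in Python. The `audit_pages` function, the limit constants, and the sample pages are all hypothetical; the limits simply mirror the character counts quoted in the list, and the script does not try to judge keyword placement or how inviting the text is.

```python
from collections import Counter

# Limits taken from the guidelines above (assumed, not official Google values).
TITLE_MAX = 60
DESCRIPTION_MIN = 150
DESCRIPTION_MAX = 160


def audit_pages(pages):
    """Check titles and descriptions against the length and duplication rules.

    `pages` maps a URL to a (title, description) tuple.
    Returns a dict mapping each URL to a list of issues found.
    """
    title_counts = Counter(title for title, _ in pages.values())
    description_counts = Counter(desc for _, desc in pages.values())

    report = {}
    for url, (title, description) in pages.items():
        issues = []
        if len(title) > TITLE_MAX:
            issues.append(f"title longer than {TITLE_MAX} characters")
        if not DESCRIPTION_MIN <= len(description) <= DESCRIPTION_MAX:
            issues.append(
                f"description outside {DESCRIPTION_MIN}-{DESCRIPTION_MAX} characters"
            )
        if title_counts[title] > 1:
            issues.append("duplicate title")
        if description_counts[description] > 1:
            issues.append("duplicate description")
        report[url] = issues
    return report


# Hypothetical site data, invented for this example.
PAGES = {
    "/": (
        "Example Law Firm | Seattle Personal Injury Attorneys",
        "Example Law Firm helps injured people in Seattle.",
    ),
    "/contact": (
        "Example Law Firm | Seattle Personal Injury Attorneys",
        "Contact Example Law Firm for a free consultation.",
    ),
}

for url, issues in audit_pages(PAGES).items():
    print(url, issues)
```

A page with an empty issue list passes these mechanical checks, but you still need to read the title and description yourself to confirm they describe the page accurately.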

There You Have It

To see how all of this fits into the bigger picture, check out Ahrefs’ guide to on-page SEO. It does a good job of showing how much of an impact meta tags have on your on-page SEO.