If your site runs on WordPress, it is quite possible, even likely, that it needs a diet. WordPress makes it mind-numbingly easy to create lots of pages by recycling your content, or snippets of it, into various related pages. The problem has been grossly exacerbated by uninformed SEO consultants pushing their clients to aggressively “tag” blog posts.

WordPress Often Generates Too Many Pages

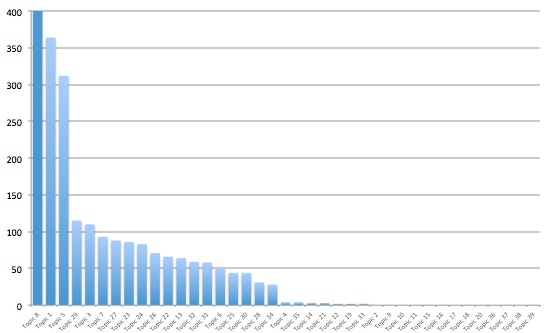

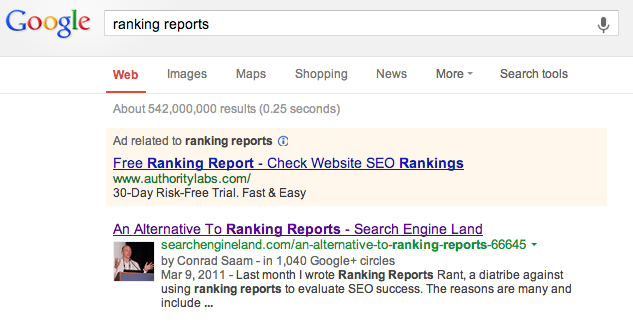

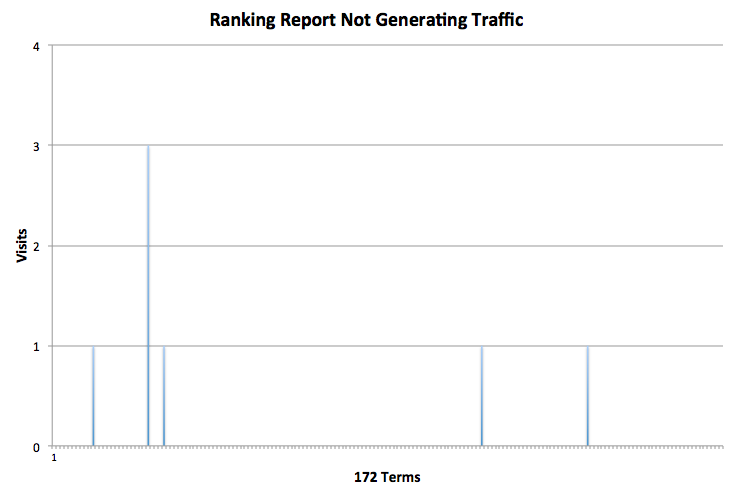

First, understand that search engines don’t necessarily review all of the pages on a site, but instead use the site’s authority (from links etc.) to determine just how many pages they will both crawl (find) and index (add to the consideration set for search results). Therefore, sites with low authority and lots of pages may find that most of their pages receive zero traffic and aren’t ever seen by search engines.

Let’s use Atticus Marketing as an example to show why all of these extra pages are problematic. Yesterday, I published a great post on the differences between three mainstream content management systems. Not only have the search engines failed to send any traffic to my lovely content, they haven’t even crawled it, let alone indexed it! They don’t know it exists. This is despite the fact that I’m doing all of the social media marketing: posts on LinkedIn, Facebook and Google Plus, plus Tweets and Retweets.

Here’s why: Atticus is a very young site, with just 34 different sites linking to it, AND I’ve built out lots of extra pages through WordPress’s Categories and Tagging functionality. Every time you create a category or a tag, WordPress generates a page to organize content with that category or tag. These pages are optimized for the category or tag. This functionality can generate lots of pages with content that already has a home on your site: duplicate content that search engines eschew. (Note that tags are worse because the interface is very freeform, encouraging writers to generate multiple versions of similar tags.) You can see how this gets out of control: on AtticusMarketing.com I have a paltry 10 pages and 25 blog posts, yet Google indexes 186 different pages for the site. The reality is that the vast majority of these pages just contain content that exists elsewhere on the site.
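To make the scale of the bloat concrete, here is a small Python sketch of the arithmetic using the three figures quoted above. The function name `index_bloat` is made up for illustration, and the calculation assumes every page and post is itself indexed, which we already know is optimistic since the newest post is not. The true number of extra pages is therefore at least what this reports.

```python
def index_bloat(pages: int, posts: int, indexed: int):
    """Return (original URLs, extra indexed URLs, extra share of the index).

    Assumes every page and post is indexed, so `extra` is a lower bound.
    """
    original = pages + posts        # URLs that hold primary content
    extra = indexed - original      # indexed URLs beyond pages and posts
    return original, extra, extra / indexed


# Figures quoted for AtticusMarketing.com: 10 pages, 25 posts, 186 indexed URLs
original, extra, share = index_bloat(pages=10, posts=25, indexed=186)
print(f"{original} original URLs, {extra} extra, {share:.0%} of the index")
# prints "35 original URLs, 151 extra, 81% of the index"
```

In other words, roughly four out of every five indexed URLs are something other than a page or post I actually wrote.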

The reason the search engines haven’t deigned to even look at my lovely new content is that my site’s low authority and many duplicate-content pages combine to convince them that much of my content just isn’t worth their time.

Tagging and SPAM

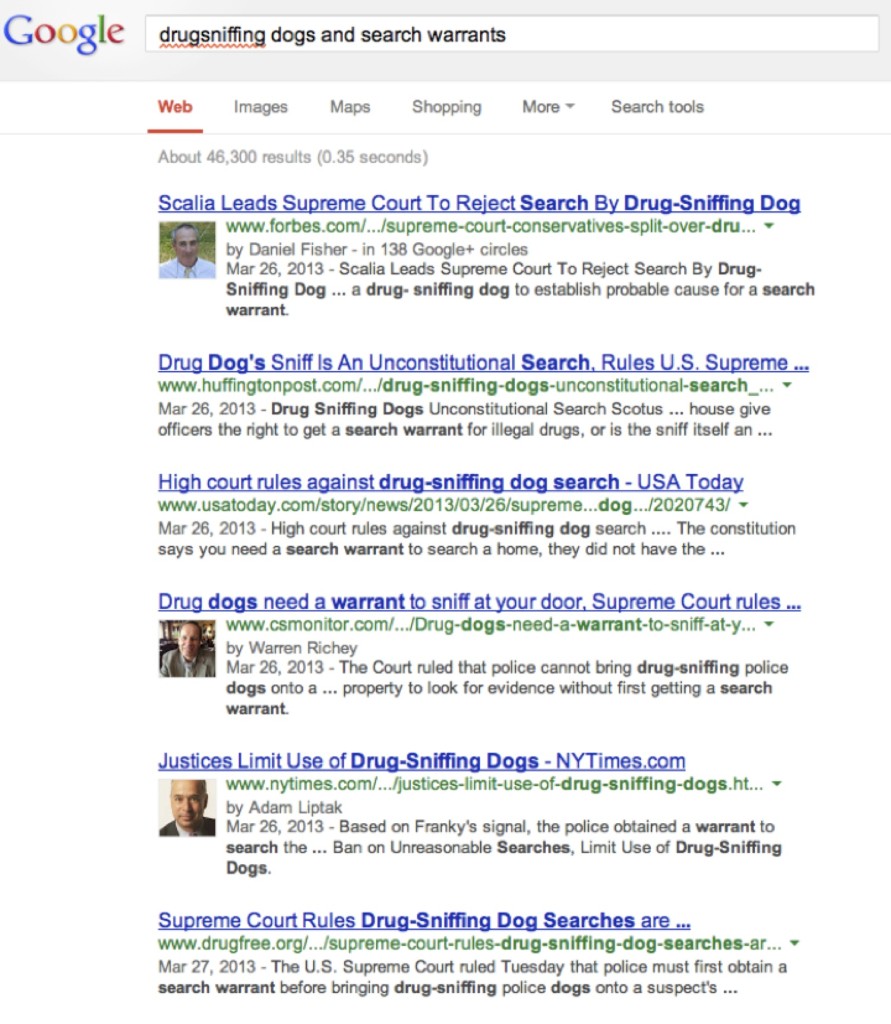

To understand why a combination of page volume and site authority determines how many pages are reviewed, look at this from a search engine’s perspective. Let’s review a more extreme example from the Carter Law Firm, where the site uses WordPress tagging functionality to generate a litany of spammy pages.

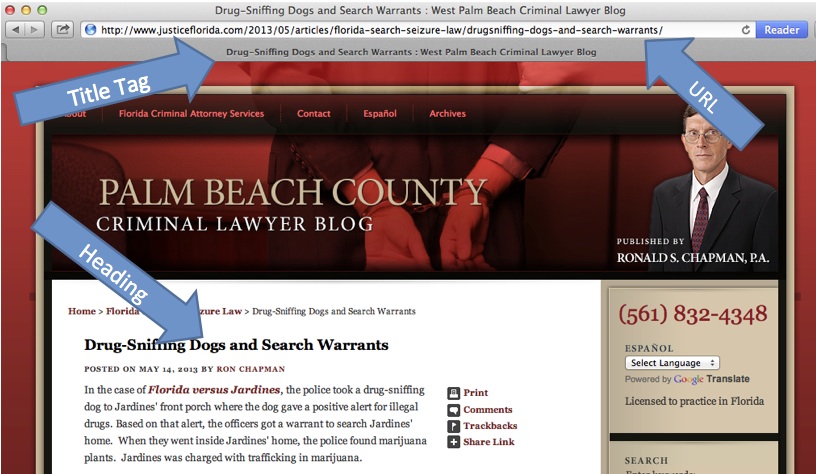

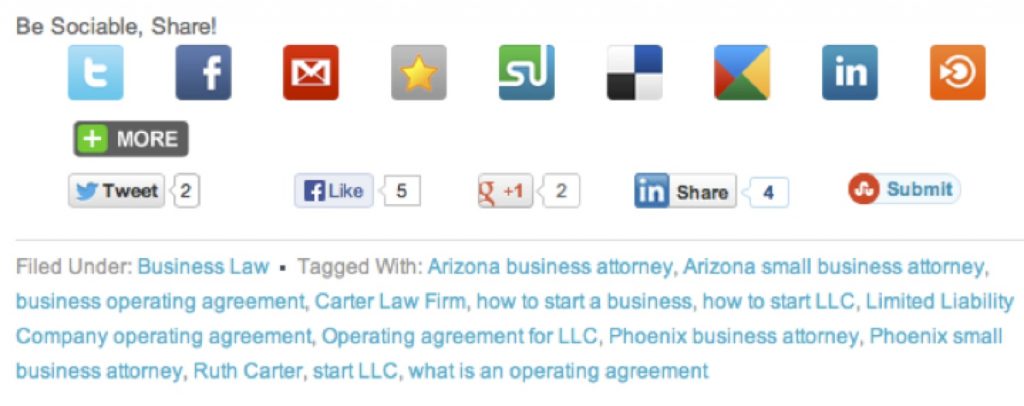

The blog is well written, has some beautiful imagery, makes appropriate use of external and internal links, and embraces edgy topics including Topless Day and revenge porn. Unfortunately, at the end of every single post is a long list of entries for both “Filed Under” (categories) and “Tagged With” (tags). Here’s the entry for the post on “Ask the Hard Questions Before Starting a Business”:

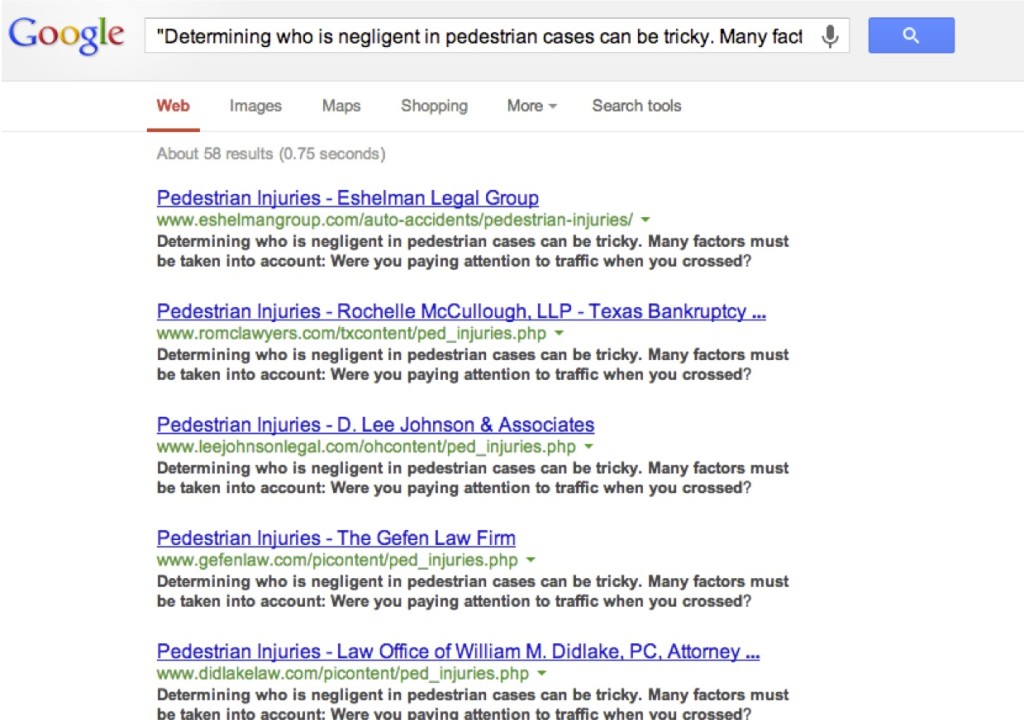

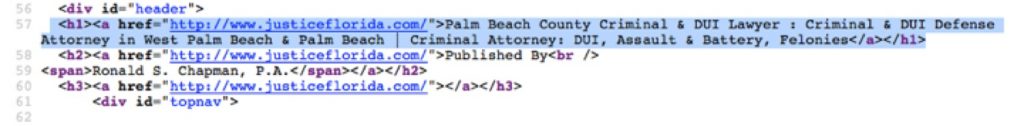

This one piece of (very good) content is now going to be replicated on 15 different pages across her domain, most of which will have nothing but a verbatim copy of this content. And many of these pages are “optimized” (I use the term very loosely here, meaning optimized with on-page elements like title tags, H1s, URLs etc.) for very similar phrases:

- “How to start a business” vs. “How to start LLC” vs. “start LLC”.

- “Arizona business attorney” vs. “Arizona small business attorney” vs. “Phoenix business attorney” vs. “Phoenix small business attorney”.

- “Business operating agreement” vs. “Limited Liability Company operating agreement” vs. “Operating agreement for LLC” vs. “what is an operating agreement”.

This is a content spam tactic intended to capture variants of long tail search queries. In reality, search engines figured this out years ago and the site owner is doing nothing other than artificially inflating her page count – most likely to the detriment of her search performance.

How to Avoid These Problems

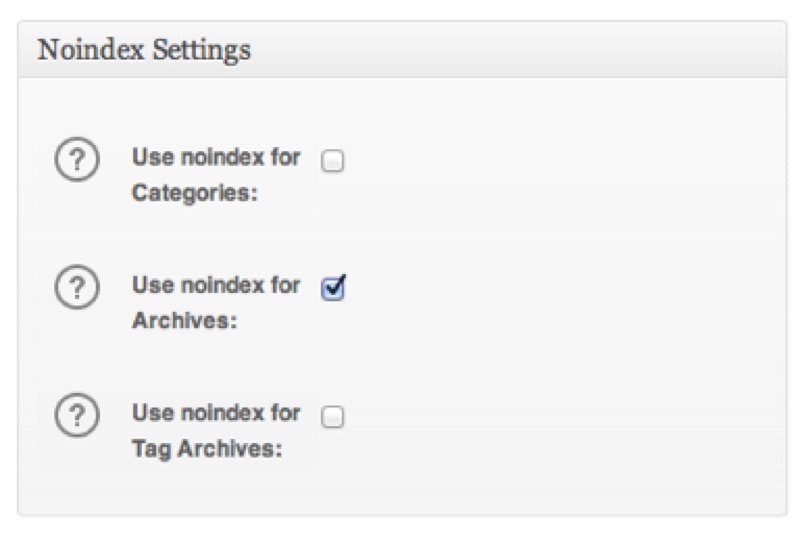

Personally, I enjoy the tag clouds generated by tagging my posts, and there is definitely a user benefit in being able to see articles grouped along common threads. To use tags while avoiding an inflated page count, simply noindex your tags. (Be careful about noindexing your categories: first make sure the URL structure for your posts doesn’t include the category folder.) The Yoast SEO plugin has simple checkboxes for this, as does the All in One SEO Pack (pictured).
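Whichever plugin you use, it is worth confirming that the setting actually took effect. Noindexing a page normally means the page carries a robots meta tag whose content includes `noindex`. The Python sketch below checks a page's HTML for that tag using only the standard library. The class and function names and the two sample HTML strings are made up for illustration, and the exact markup your plugin emits is an assumption, so view the source of one of your own tag pages to compare.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() != "robots":
            return
        for part in attr.get("content", "").split(","):
            part = part.strip().lower()
            if part:
                self.directives.add(part)


def is_noindexed(html: str) -> bool:
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page sources, for illustration only
tag_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
post_page = "<html><head><title>A post</title></head></html>"

print(is_noindexed(tag_page))   # True
print(is_noindexed(post_page))  # False
```

A tag archive should come back `True` and an ordinary post `False`. If a post comes back `True`, the noindex setting has been applied too broadly.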

When implemented carefully, however, tags can be effective in generating inbound search traffic. Follow these best practices:

- Limit your site to 5-8 general, broad categories.

- Tags should have multiple posts of a similar topic associated with them.

- Tags should be genuinely different, not near-duplicates that spam minor verbal variations (e.g., not both “divorce laws” and “divorce law”).

- The posts should be displayed as snippets (not the entire article).

- The tag page should contain its own unique content – you can do this with the SEO Ultimate plugin.
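The "genuinely different" rule is the easiest of these to audit automatically. As a rough sketch, the Python below groups tags that collapse to the same key once you lowercase them, drop filler words, and strip a trailing "s". The stop-word list and the crude singular rule are my own assumptions rather than anything WordPress or an SEO plugin does, so treat the output as candidates to review by hand, not a verdict.

```python
from collections import defaultdict

# Filler words ignored when comparing tags (an illustrative, incomplete list)
STOP_WORDS = {"a", "an", "the", "to", "how", "for", "of", "is", "what"}


def tag_key(tag: str) -> str:
    """Reduce a tag to a normalized key so trivial variants compare equal."""
    words = []
    for word in tag.lower().split():
        word = word.strip(".,!?\"'")
        if not word or word in STOP_WORDS:
            continue
        # Crude singularization: "laws" -> "law"; leaves "business" alone
        if len(word) > 3 and word.endswith("s") and not word.endswith("ss"):
            word = word[:-1]
        words.append(word)
    return " ".join(sorted(words))


def near_duplicates(tags):
    """Return groups of tags that share the same normalized key."""
    groups = defaultdict(list)
    for tag in tags:
        groups[tag_key(tag)].append(tag)
    return [group for group in groups.values() if len(group) > 1]


print(near_duplicates(["divorce laws", "divorce law", "child custody"]))
# prints [['divorce laws', 'divorce law']]
```

Run against the law firm tags quoted earlier, this would also pair “How to start LLC” with “start LLC”. It will not catch geographic or wording variants such as “Arizona business attorney” versus “Phoenix business attorney”, which still need a human eye.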

If all of this sounds overly technical and confusing, buy a WordPress book or invest some time with someone experienced in both SEO and WordPress, and leave the tagging to the graffiti artists.